Table of Contents: Posts Summary

What have I posted so far, what's next, and what do you want to see next?

As the number of posts on my Substack page grows, I think it’s a good idea to make a Table of Contents summary, not only for reader convenience but also because I would love to hear from my audience about which topics you want to see covered next.

💬 Please drop a comment.

[+] Monthly Literature Reviews

[+] Model Surveys

Overview: ML Models for Computational Fluid Dynamics Simulation

CAE: Landscape of AI Models w.r.t. GenAI (not considering LLMs, agents, or copilots here)

[+] Guidance on Specific Topics

Cookbook: Making Small Dataset Projects Successful (CFD, FEA, …)

NVIDIA Part 1: Code, Tutorials, Papers, & the Conference You Should Attend

NVIDIA Part 3: Explaining their latest and greatest ML Surrogate for CFD

Learning Pathway Pt 1: AI/ML Learning Pathway for us Mech./Aero Engineers

Learning Pathway Pt 2: Datasets, Hands-on, ML libraries, ...

[+] Deep Dives

[+] Tutorials

Back to Basics - code & writeup for practical hyperparameter tuning exercise

Code Examples: AI-Based Sampling Approaches (Cookbook Part 2)

Monthly Literature Reviews

I have a goal of reading 1,000 publications on SciML topics by December 31, 2025. Each month, I will make a post covering what I read that month toward that goal. In these posts, I cover literature on different clusters of topics as well as ‘what was published this month’.

January Literature Summary w/ PDFs

My PhD advisor left many impressions on me, one of which was the importance of routinely reading the literature in your subject area. At the time, the message was that your ability to produce quality research (and perform well) in the lab was limited mainly by how little time you spent reading scientific publications. I still do my best to carry this lesson forward, even though I am now far removed from a graduate school lab. I feel it’s vital to keep reading, whether to keep up with innovations in AI/ML or to dive deep into fundamental concepts and make sure you understand them properly.

February Literature Summary w/ PDFs

In January I set out on an ambitious goal: read 1,000 publications on this specific topic in 2025. Well, I am not very good at math, but that’s about 83 publications per month (1,000 / 12 ≈ 83), and I am mostly on track.

Model Surveys

Everybody loves a good overview. There is an overwhelming number of methodologies out there, so it’s worth trying to break them down and categorize them.

Overview: ML Models for Computational Fluid Dynamics Simulation

Oftentimes, I find that formulating the problem clearly can take as much time as actually solving it, or more. If you have been out of the loop on AI/ML developments in the simulation space (SciML), it …

CAE: Landscape of AI Models w.r.t. GenAI (not considering LLMs, agents, or copilots here)

I’m a broken record: I spend my midnight hours writing to help you sift through the cloud of hype and nonsense that surrounds the very real value AI/ML can bring to the CAE (simulation) engineering community. I shouldn’t even say ‘can bring’, since it has already been used in industry for more than 2 years (and no, not just by research teams exploring new methods).

Part 1: AI/ML in Solvers (CFD, FEA)

Let’s survey the use cases for AI/ML within our CFD and FEA numerical frameworks. We constantly see publications on newly emerging AI/ML models, but here we want to go beyond the scenario in which models are trained on a body of pre-prepared simulation data and then used as a surrogate for additional simulations. With the ever-increasing need for higher-fidelity simulation delivered in more accessible ways, as the image below conveys [1] (Figure 1 from the “CFD Vision 2030” roadmap), we

Guidance on Specific Topics

AI/ML in CAE: Job Postings, Career Advice, & Growth Talk

I took a variety of those ‘What Color Is Your Parachute’-type tests growing up, and leadership always ranked among the traits that resonated with me most. Upon self-reflection, probably a big…

NVIDIA Part 1: Code, Tutorials, Papers, & the Conference You Should Attend

Part I: I want to make you aware of the NVIDIA GTC conference, with some practical details and tips (how to register, talks of interest, notes). Note that it starts Monday, March 17th.

NVIDIA Part 3: Explaining their latest and greatest ML Surrogate for CFD

I have a handful of favorite ML surrogates for CFD/FEA, and this one makes the list. However, upon reading the paper for the first time, I didn’t fully understand the architecture (the components, wh…

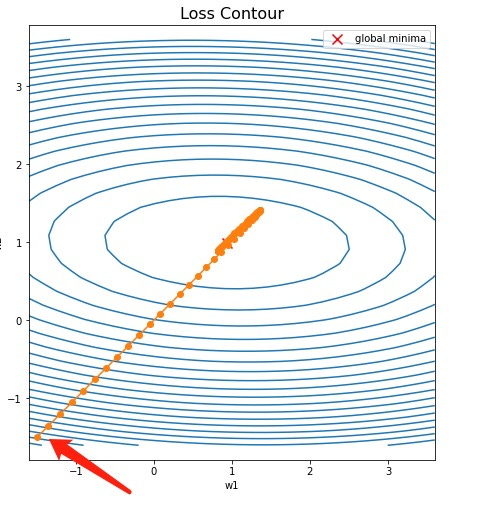

The Adam Optimizer - Conceptual Introduction

Stochastic gradient-based optimization is of core practical importance in many fields of science and engineering. Many problems in these fields can be cast as the optimization of some scalar parameterized objective function requiring maximization or minimization with respect to its parameters [1]. You may be familiar with the related framework from engineering design optimization projects: for example, I want to maximize the lift of my airfoil while minimizing its drag by varying the parameter values that specify its geometry.
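To make the update rule concrete, here is a minimal pure-Python sketch of Adam using the commonly cited defaults β1 = 0.9, β2 = 0.999, ε = 1e-8. The objective f(x, y) = (x - 3)² + 10(y + 1)² is a hypothetical stand-in for a design objective like the airfoil example (it is not an aerodynamic model), the learning rate of 0.1 and the step count are my own choices for this toy problem, and the gradients are exact rather than stochastic. Treat it as an illustration of the mechanics, not a reference implementation.

```python
import math

def adam_minimize(grad, theta0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=2000):
    """Minimize a function with Adam, given its gradient (pure-Python sketch)."""
    theta = list(theta0)
    m = [0.0] * len(theta)  # running estimate of the gradient mean (first moment)
    v = [0.0] * len(theta)  # running estimate of the squared gradient (second moment)
    for t in range(1, steps + 1):
        g = grad(theta)
        for i in range(len(theta)):
            m[i] = beta1 * m[i] + (1 - beta1) * g[i]
            v[i] = beta2 * v[i] + (1 - beta2) * g[i] ** 2
            # Bias correction: m and v start at zero, so early estimates are scaled up.
            m_hat = m[i] / (1 - beta1 ** t)
            v_hat = v[i] / (1 - beta2 ** t)
            # Per-parameter step: each coordinate gets its own effective step size.
            theta[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta

# Hypothetical toy objective: f(x, y) = (x - 3)^2 + 10 (y + 1)^2, minimized at (3, -1).
def grad_f(p):
    x, y = p
    return [2 * (x - 3), 20 * (y + 1)]

x_opt, y_opt = adam_minimize(grad_f, [0.0, 0.0])
print(round(x_opt, 3), round(y_opt, 3))
```

The division by sqrt(v_hat) is what makes the method adaptive: the steep y-direction and the shallow x-direction each end up with their own effective step size instead of sharing one global learning rate.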

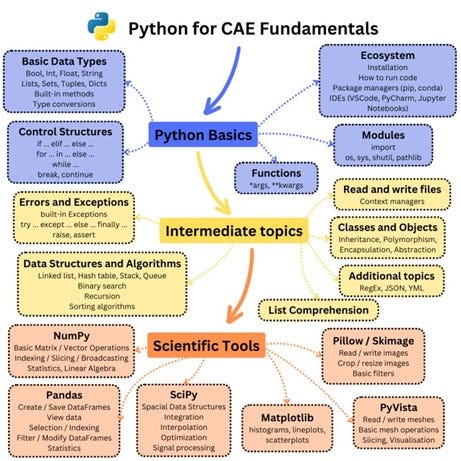

Learning Pathway Pt 1: AI/ML for us Mech./Aero Engineers

Thanks for reading AI/Machine learning in fluid mechanics, engineering, physics! This post is public so feel free to share it.

Learning Pathway Pt 2: Datasets, Hands-on, ML libraries, ...

Deep Dives

The Attention Mechanism

Attention Mechanisms for CFD/FEA - Part 1

Even back in 2019 I was obsessed with the idea that machine learning’s ability to learn complex non-linear patterns could be leveraged in full 3D engineering simulation to better understand the physics we were modeling. The image below is from a film-cooling (jet-in-crossflow) CFD simulation I did, in which identifying turbulence structures was integral to understanding the resulting heat transfer.

Technology readiness levels for machine learning systems

By now, you’ve probably seen very compelling demonstrations of AI/ML in simulation projects. However, many of these projects never leave the Python sandbox to make their way into industry pro…

Parsimony in Scientific Machine Learning

Thanks for reading! We previously kicked off a series (two parts so far) on how to succeed in projects where data is limited. To complement this, let’s talk about models that carry a similar ‘minimalist’ attitude: simpler models, with explanations where possible, rather than highly complex ones. For some problems, the complex ones are the superior choice. But as often as we can, it’s useful to use simple models (at least as a means of comparison and for interpretability).
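As a minimal illustration of a simple model serving as a yardstick, the sketch below fits a two-coefficient least-squares line and compares its error with an even simpler predict-the-mean model. The data are synthetic and hypothetical. In a real project, these baseline numbers would be compared against whatever complex model you are considering, ideally on held-out data rather than on the training points used here.

```python
import math
import random

def fit_line(xs, ys):
    """Closed-form ordinary least squares for y ≈ a + b*x."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = y_mean - b * x_mean
    return a, b

def rmse(pred, ys):
    """Root-mean-square error between predictions and targets."""
    return math.sqrt(sum((p - y) ** 2 for p, y in zip(pred, ys)) / len(ys))

# Hypothetical small dataset: a response nearly linear in one input, plus noise.
random.seed(0)
xs = [i / 10 for i in range(30)]
ys = [1.5 + 2.0 * x + random.gauss(0, 0.1) for x in xs]

a, b = fit_line(xs, ys)
baseline_rmse = rmse([a + b * x for x in xs], ys)
mean_rmse = rmse([sum(ys) / len(ys)] * len(ys), ys)  # the simplest possible model
print(f"line: y = {a:.2f} + {b:.2f} x, RMSE = {baseline_rmse:.3f}")
print(f"mean-only RMSE = {mean_rmse:.3f}")
```

The two fitted coefficients are directly interpretable, and any more complex model now has a concrete error level it needs to beat to justify its added complexity.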

Tutorials

Install-Free Code Tutorial: PINNs in turbulent flow

This blog will cover the following for a physics-informed neural networks (PINNs) project:

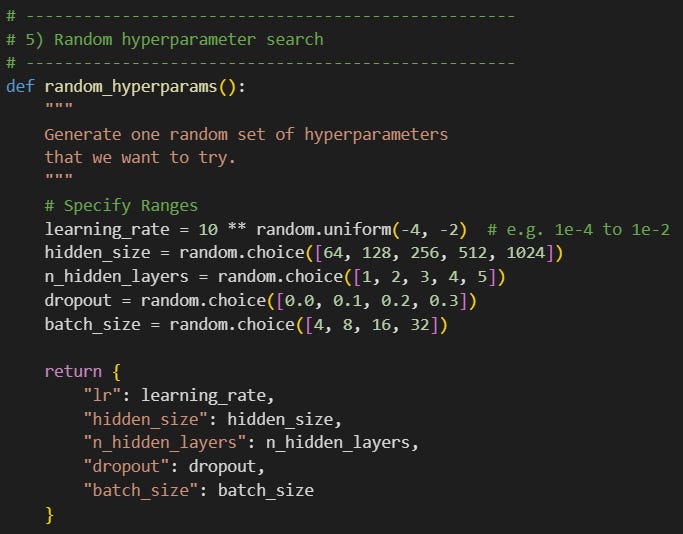

Back to Basics - code & writeup for practical hyperparameter tuning exercise

This is a very common situation: you pick up a new project and identify the architecture you think will work best (e.g., vanilla neural network, XGBoost, 3D VAE, PCA+NN, whatever). But then you face a huge number of possible combinations of model settings (hyperparameters) to choose from. How can we navigate this process efficiently? As I write this, I sense this may become the first part of a series of posts on this topic. But let’s start with something practical.
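One practical starting point (not necessarily the approach taken in the post) is random search: sample configurations from a defined search space and keep the best one. In the sketch below, the search space, the parameter names, and the `validation_loss` function are all hypothetical; `validation_loss` is a synthetic stand-in for actually training a model and scoring it on validation data.

```python
import math
import random

# Hypothetical search space for a small neural network; names and ranges are illustrative.
SEARCH_SPACE = {
    "learning_rate": ("log_uniform", 1e-4, 1e-1),
    "hidden_units": ("choice", [16, 32, 64, 128]),
    "num_layers": ("choice", [1, 2, 3, 4]),
}

def sample_config(rng):
    """Draw one configuration from SEARCH_SPACE."""
    config = {}
    for name, spec in SEARCH_SPACE.items():
        if spec[0] == "log_uniform":
            low, high = spec[1], spec[2]
            # Sample the exponent uniformly so each order of magnitude is equally likely.
            config[name] = 10 ** rng.uniform(math.log10(low), math.log10(high))
        else:
            config[name] = rng.choice(spec[1])
    return config

def validation_loss(config):
    """Synthetic stand-in for 'train the model, return validation error'."""
    lr_term = (math.log10(config["learning_rate"]) + 2.5) ** 2  # lowest near lr ~ 3e-3
    size_term = abs(config["hidden_units"] - 64) / 64
    depth_term = abs(config["num_layers"] - 2) * 0.1
    return lr_term + size_term + depth_term

def random_search(n_trials=50, seed=0):
    """Evaluate n_trials random configurations and return the best one found."""
    rng = random.Random(seed)
    best_config, best_loss = None, float("inf")
    for _ in range(n_trials):
        config = sample_config(rng)
        loss = validation_loss(config)
        if loss < best_loss:
            best_config, best_loss = config, loss
    return best_config, best_loss

best_config, best_loss = random_search()
print(best_config, round(best_loss, 4))
```

Sampling the learning rate on a log scale is a common choice because its useful values span several orders of magnitude; a uniform draw would spend most trials near the top of the range.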

Code Examples: AI-Based Sampling Approaches (Cookbook Part 2)

Let’s cut to the chase: our simulations are expensive, so if we want to train a machine learning model on a set of them, we would ideally need as few as possible. Further, it would be good to have some common-sense logic for confidently picking which simulations to run as we build up our dataset, rather than something less ideal like randomly picking samples (which doesn’t give us confidence that the model can be trusted when we use it for inference after training).
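As a simple point of comparison to random picking, here is a sketch of greedy maximin (farthest-point) selection: each new simulation is placed at the candidate design that is farthest from every design already run. This is a space-filling heuristic swapped in for illustration, not one of the AI-based approaches covered in the post; the 2D design space, the candidate pool, and the function name are hypothetical.

```python
import math
import random

def farthest_point_selection(candidates, initial, n_new):
    """Greedy maximin: repeatedly pick the candidate farthest from all chosen points."""
    chosen = list(initial)
    pool = [c for c in candidates if c not in chosen]
    picked = []
    for _ in range(n_new):
        # Score each candidate by the distance to its nearest already-chosen design.
        # (math.dist requires Python 3.8+.)
        best = max(pool, key=lambda c: min(math.dist(c, s) for s in chosen))
        chosen.append(best)
        picked.append(best)
        pool.remove(best)
    return picked

# Hypothetical 2D design space, e.g., two normalized geometry parameters in [0, 1].
rng = random.Random(42)
candidates = [(rng.random(), rng.random()) for _ in range(500)]
initial = [(0.5, 0.5)]  # one simulation already run at the center
next_runs = farthest_point_selection(candidates, initial, n_new=5)
for p in next_runs:
    print(f"run simulation at ({p[0]:.2f}, {p[1]:.2f})")
```

Because it only needs distances, this works before any model is trained; once a surrogate exists, the distance score could be replaced by a model-based criterion such as prediction uncertainty.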