NVIDIA Part 2: Papers galore and conference takeaways

Tutorials, Overview of the 'Model Zoo', Publications, and Industry Insights

In this blog I will:

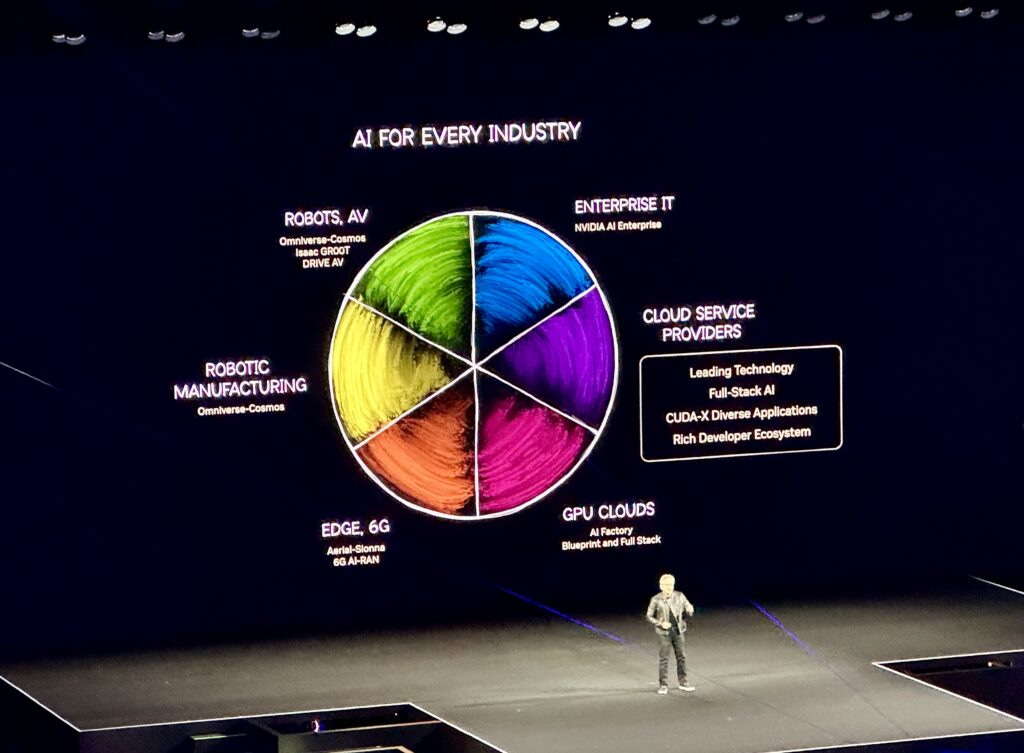

Share some take-aways from the NVIDIA GTC conference

Give an overview of many of the models available in NVIDIA’s PhysicsNeMo, their deep learning framework for applications in physics and partial differential equations (PDEs)

NVIDIA GTC Conference Notes of Interest

Just wanted to dump some updates and take-aways from the event. It’s impossible to keep up with everything, so here’s a first pass.

Why is MotorTrend at the event? The future of cars appears to be autonomous vehicles, and many car companies were there showing off their wares (Ford, Lucid, Mercedes-Benz, JLR, Volvo, …)

On that note, GM is partnering with NVIDIA on autonomous driving. The partnership is focused on manufacturing, design, simulation, and infrastructure within the vehicles themselves.

NVIDIA finally gave a name to Project ‘DIGITS’ → DGX Spark, a mini AI supercomputer with 128GB of memory and 1 petaflop of compute. There is also DGX Station, which extends that to 20 petaflops.

Many cute robots: some that bounced like bunnies and doggies, Star Wars-looking droids (we saw Disney Imagineers there), and others that vacuum, cook in the kitchen, and much more.

AI factories. NVIDIA announced a new operating system for AI factories, called Dynamo (for efficiently hosting things like AI agents), along with the launch of the ‘Rubin chip family’. These are not just faster GPUs, but chips designed to power AI factories. Gosh, I have a feeling we are going to hear that term a lot in the coming years. My understanding of the term ‘AI factory’ is a datacenter that’s not meant to host data or apps or serve websites, but to generate intelligence.

Autonomous food/snack delivery robot provided by Nuro

I saw a cool stat that I wanted to share: a tally of how many sessions there were per industry segment. Warning: I did not vet these numbers. It is useful data for people who want to get into AI but have trouble picking which industry to funnel into.

All industries: 536

Healthcare/Life sciences: 112

Automotive/Transportation: 64

HPC/Scientific Computing: 61

Cloud services: 54

Manufacturing: 53
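To put the tally in perspective, here is a quick sketch that turns the counts above into shares of the total. It assumes the segments do not overlap (a session tagged under two industries would be double counted), which I have not verified, and the variable and function names are just mine for illustration.

```python
# Session counts as quoted above (not vetted).
TOTAL_SESSIONS = 536
sessions_by_industry = {
    "Healthcare/Life sciences": 112,
    "Automotive/Transportation": 64,
    "HPC/Scientific Computing": 61,
    "Cloud services": 54,
    "Manufacturing": 53,
}


def session_shares(counts, total):
    """Return each segment's share of the total in percent, to one decimal."""
    return {name: round(100 * n / total, 1) for name, n in counts.items()}


shares = session_shares(sessions_by_industry, TOTAL_SESSIONS)
listed = sum(sessions_by_industry.values())
# Assumption: segments are disjoint, so the remainder belongs to other segments.
unlisted = TOTAL_SESSIONS - listed

for name, pct in shares.items():
    print(f"{name}: {pct}%")
print(f"Listed segments: {listed} sessions, everything else: {unlisted}")
```

Under that assumption, healthcare and life sciences alone account for roughly a fifth of all sessions, and the five listed segments together cover 344 of the 536.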

Lastly, I really liked the overview Rescale provided. Rather than paraphrase it, I will just point you to the blog they wrote and leave a few line items below.

The announcement of NVIDIA’s Blackwell GPUs marks a significant leap forward in compute capabilities. With up to 150x more compute power, Blackwell promises to unleash new possibilities for AI, simulation, and data processing.

NVIDIA CUDA-X updates

Discussion on ‘growing convergence’ of quantum computing and HPC

Rescale Announces CAE Hub Powered by NVIDIA

Other Notes of Interest

NVIDIA released a new model, DoMINO, just before GTC. It is a great model, and while I still have my other preferred go-tos, I think the paper is an awesome read and the model is worth considering for your work.

Abstract [Source]

“Numerical simulations play a critical role in design and development of engineering products and processes. Traditional computational methods, such as CFD, can provide accurate predictions but are computationally expensive, particularly for complex geometries. Several machine learning (ML) models have been proposed in the literature to significantly reduce computation time while maintaining acceptable accuracy. However, ML models often face limitations in terms of accuracy and scalability and depend on significant mesh downsampling, which can negatively affect prediction accuracy and generalization. In this work, we propose a novel ML model architecture, DoMINO (Decomposable Multi-scale Iterative Neural Operator) developed in NVIDIA Modulus to address the various challenges of machine learning based surrogate modeling of engineering simulations. DoMINO is a point cloud-based ML model that uses local geometric information to predict flow fields on discrete points. The DoMINO model is validated for the automotive aerodynamics use case using the DrivAerML dataset. Through our experiments we demonstrate the scalability, performance, accuracy and generalization of our model to both in-distribution and out-of-distribution testing samples. Moreover, the results are analyzed using a range of engineering specific metrics important for validating numerical simulations.”

We are in an era where all kinds of startups, independent researchers, and engineers in the field are hearing the term ‘foundational models’. As in, pre-trained models that are handed to practitioners, ready to go for prediction, and supposedly accurate over a wide range of diverse problems without the need for more training or tuning. For example, an aerospace foundational model that can accurately predict flow fields over ‘any’ airfoil in ‘any’ operating state out of the box. These are wild claims, which I label as the ‘hype’ or ‘buzz’ that clouds and follows any promising new technology. You’ll notice that these statements almost never come from the authors of these sorts of publications.

Anyways, I wanted to clear this up and point out that the DoMINO authors themselves say it is a good pre-trained model to use, but that it is not a foundation model. Responsible and trustworthy AI models are essential to the continued adoption of this technology, so I wanted to stop this spiral in its tracks.

Luminary Cloud gave a cool webinar which used this DoMINO model. I myself did not learn anything new from it, but maybe you will (and it’s always cool to talk about SciML).

NVIDIA Modulus / NVIDIA PhysicsNeMo

NVIDIA Modulus is now “NVIDIA PhysicsNeMo” (re-branding). Basically, it is an open-source deep learning framework designed for physics-informed machine learning and AI-driven physics simulations (various kinds). It provides tools to build and train neural network models that can learn and simulate complex physical systems (such as fluid dynamics, climate, structural mechanics, etc.). PhysicsNeMo supports a range of model types from physics-informed neural networks (PINNs) that enforce physical laws in the loss function, to data-driven models like neural operators and graph neural networks that learn surrogate models from simulation or experimental data. The goal of PhysicsNeMo is to accelerate scientific computing by blending first-principles physics with state-of-the-art AI, enabling fast and accurate simulations for engineering and scientific applications [reference]. Built on PyTorch, PhysicsNeMo comes with optimized model architectures, utilities for multi-GPU training, and an extensive library of examples and tutorials to help researchers and engineers develop AI solutions for PDE-based problems.
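To make “enforce physical laws in the loss function” concrete, here is a minimal sketch in plain Python. To be clear, this is not the PhysicsNeMo API and there is no neural network in it: a two-parameter trial function u(x) = b·exp(−a·x) stands in for the network, finite differences stand in for automatic differentiation, and the toy problem (u′ + u = 0 with u(0) = 1) is my own choice for illustration. The structure of the loss is the point: an equation residual evaluated at collocation points plus a boundary-condition penalty.

```python
import math


def trial_solution(x, a, b):
    """Two-parameter stand-in for a neural network: u(x) = b * exp(-a * x)."""
    return b * math.exp(-a * x)


def physics_informed_loss(a, b, points, h=1e-4, bc_weight=1.0):
    """Mean squared residual of u' + u = 0, plus a penalty enforcing u(0) = 1."""
    residual_sq = 0.0
    for x in points:
        # Central finite difference stands in for automatic differentiation.
        du_dx = (trial_solution(x + h, a, b) - trial_solution(x - h, a, b)) / (2 * h)
        residual_sq += (du_dx + trial_solution(x, a, b)) ** 2
    equation_term = residual_sq / len(points)
    boundary_term = (trial_solution(0.0, a, b) - 1.0) ** 2
    return equation_term + bc_weight * boundary_term


def numerical_gradient(a, b, points, eps=1e-6):
    """Central-difference gradient of the loss with respect to (a, b)."""
    grad_a = (physics_informed_loss(a + eps, b, points)
              - physics_informed_loss(a - eps, b, points)) / (2 * eps)
    grad_b = (physics_informed_loss(a, b + eps, points)
              - physics_informed_loss(a, b - eps, points)) / (2 * eps)
    return grad_a, grad_b


# Collocation points on [0, 1]; note that no solution data is used anywhere.
collocation_points = [i / 10 for i in range(11)]

# Plain gradient descent from a deliberately wrong starting guess.
a, b = 0.2, 0.5
learning_rate = 0.2
for _ in range(500):
    grad_a, grad_b = numerical_gradient(a, b, collocation_points)
    a -= learning_rate * grad_a
    b -= learning_rate * grad_b

print(f"learned a = {a:.4f}, b = {b:.4f} (exact solution: a = 1, b = 1)")
```

The training loop never sees a single value of the true solution exp(−x). The equation residual and the boundary condition are enough to pull the parameters toward it, which is the same idea a PINN applies with a real network and a real PDE.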

Edit: I am super annoyed to say that all my links broke halfway through writing this blog (hence the delay). I did my best to go back and find new ones, but this was a huge effort, so please have some understanding. NVIDIA, if you are reading this, please check the links in your documentation (many yield 404s).

Landscape of Available Models

I will now review many different models, one-by-one.