Promising AI Foundation Models in Science: Examples with Code and Data

Papers & Code for Foundation Models in Science

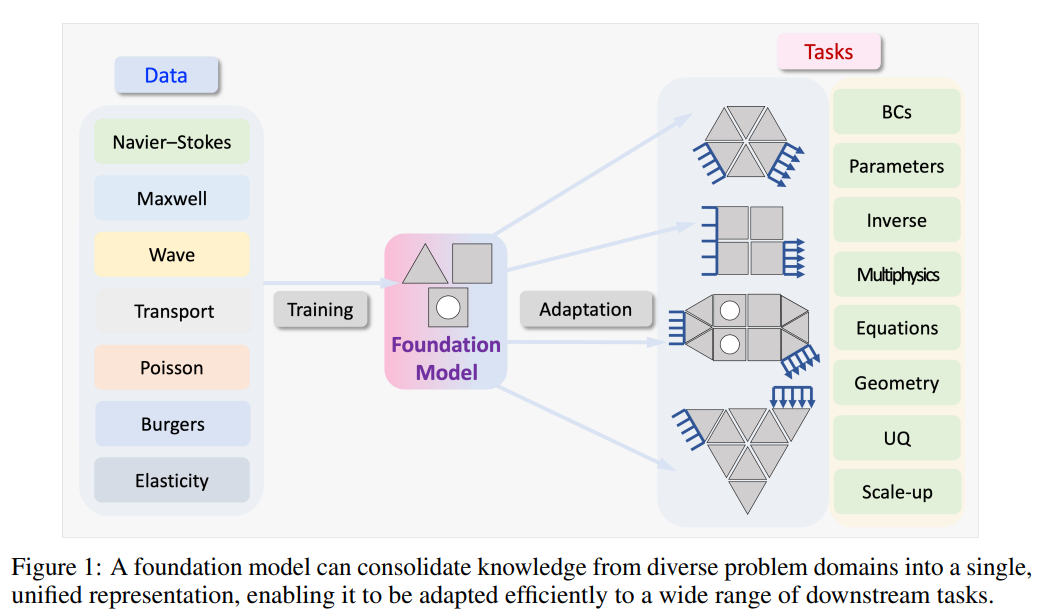

In the most approachable terms, foundation models are a class of AI/ML models that stay accurate and useful over a much broader range of circumstances when they are used after training (a stage often called inference). You may have experienced a situation where you (or someone you know) trained an ML model on a certain dataset, with the understanding that the model would only give useful predictions on very similar data. If you keep training different and better AI/ML models until they become accurate over an increasingly diverse set of problems, then simply put you are moving ‘towards’ a foundation model (but it’s extremely difficult).
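To make the "only useful on very similar data" point concrete, here is a minimal sketch in plain Python. Everything in it is an assumption for illustration: the target function `true_fn`, the straight-line "model", and the two test ranges are made up, and a real ML model would be far more complex. The pattern it shows is the general one, though: small error near the training data, large error far from it.

```python
# Toy illustration: a model fit on a narrow range of data degrades outside it.
# The target function, the model, and the ranges are all made up for this sketch.

def true_fn(x):
    """Stand-in for the real process that generated the data."""
    return x * x

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a*x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    a = cov / var
    b = mean_y - a * mean_x
    return a, b

def mean_abs_error(a, b, xs):
    """Average absolute gap between the fitted line and the true function."""
    return sum(abs((a * x + b) - true_fn(x)) for x in xs) / len(xs)

# Train only on inputs between 0 and 1.
train_xs = [i / 10 for i in range(11)]
a, b = fit_line(train_xs, [true_fn(x) for x in train_xs])

similar_xs = [0.05 + i / 10 for i in range(10)]   # inside the training range
different_xs = [3.0 + i / 10 for i in range(11)]  # far outside the training range

err_similar = mean_abs_error(a, b, similar_xs)
err_different = mean_abs_error(a, b, different_xs)
print(f"error on similar data:   {err_similar:.3f}")
print(f"error on different data: {err_different:.3f}")
```

A foundation model candidate is, loosely speaking, one where the second number stays small across many such "different" regimes, which is why the bar is so high.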

An example to help set the stage for readers before we nerd out about science:

Let’s say I train a model that can classify ‘dog’ or ‘cat’ when shown an image of a dog or a cat. Suppose further that, after improvements and intense training on all kinds of different animal images, the model can now identify any animal in any picture you show it.

Several ‘vision models’ are widely treated as “foundational” for image classification because they’re pretrained at scale and then adapted to a wide variety of tasks (often with little or no task-specific data). Examples include BiT (Big Transfer) (reference publication), CLIP (reference), and multiple others.
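As a rough sketch of how "little or no task-specific data" can work in a CLIP-style setup: images and text labels are mapped into a shared embedding space, and an image is assigned the label whose embedding is most similar to it. The code below is not CLIP's API. The function names are hypothetical and the vectors are hand-written stand-ins for what pretrained image and text encoders would produce. It only shows the similarity step.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def zero_shot_classify(image_vec, label_vecs):
    """Pick the label whose (text) embedding is closest to the image embedding."""
    scores = {label: cosine(image_vec, vec) for label, vec in label_vecs.items()}
    best = max(scores, key=scores.get)
    return best, scores

# Hypothetical embeddings, written by hand for this sketch.
# In a real system these would come from pretrained text and image encoders.
label_vecs = {
    "a photo of a dog": [0.9, 0.1, 0.0],
    "a photo of a cat": [0.1, 0.9, 0.0],
    "a photo of a horse": [0.0, 0.1, 0.9],
}
image_vec = [0.1, 0.2, 0.8]  # pretend embedding of a horse photo

best, scores = zero_shot_classify(image_vec, label_vecs)
print(best)
```

Note that supporting a new class here only means adding another entry to `label_vecs`. No retraining step appears anywhere in the sketch, which is the property that makes this family of models feel "foundational".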

After the overwhelming success of ChatGPT as a foundation model that works with language, whether human language or programming language, folks have turned to scientific applications and asked themselves an ambitious question:

Could a machine learning model truly learn a specific area of science?

If so, the possibilities are incredibly exciting, because it would mean we humans have developed yet another powerful tool to advance our understanding of physics. However, as with almost all good things, there has been misleading marketing by people who are either unaware of how machine learning really works or are charlatans. This has brought confusion to the mainstream about an already complicated and (often) black-box technology.

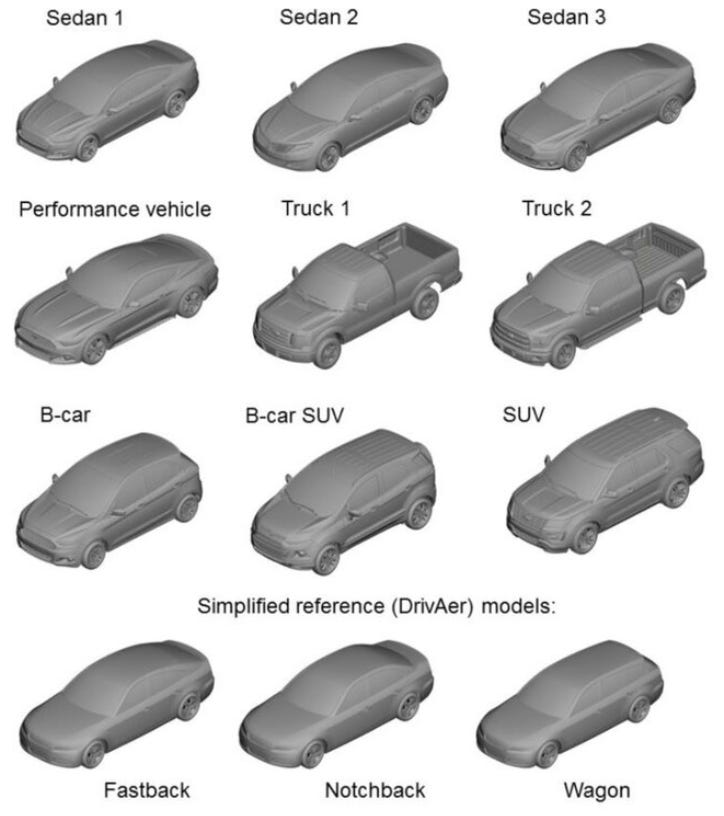

In this blog, I focus on using machine learning models to predict scientific/engineering results and data. However, this is closely coupled to another very important topic that will shape industry in the future: “Industry Foundation Models”, meaning models that speak the language of a certain industry so well that they can dramatically accelerate the workforce (e.g. a faster time to market for a company designing a new car or product). Mainstream language models like ChatGPT fail today when inserted into industry teams, for multiple reasons. For more on this kind of industrial foundation model, check this post.

I want to do two things here on this topic for the remainder of the blog:

Point you to my past blog post that clarifies the criteria you should use when judging ‘is this a foundation model?’ This is extremely important for assessing the real capabilities of AI models (the vast majority are not even close to being a foundation model, and it’s important to recognize this).

Podcast: Defining Foundation Models for Computational Science: A Call for Clarity and Rigor

Enjoy this synthetic podcast on the paper:

(The remainder of this post) Share what I believe are examples of actual foundation model candidates for certain specific areas of science. Even my language here is carefully chosen, as the term ‘foundation model’ is a big claim and is challenging to judge unilaterally.

Let’s now start the review of what I believe are good foundation model candidates across science and engineering domains. I loved writing this and finding hands-on code/papers for these, so I assure you there will be a ‘part 2’ on this topic.